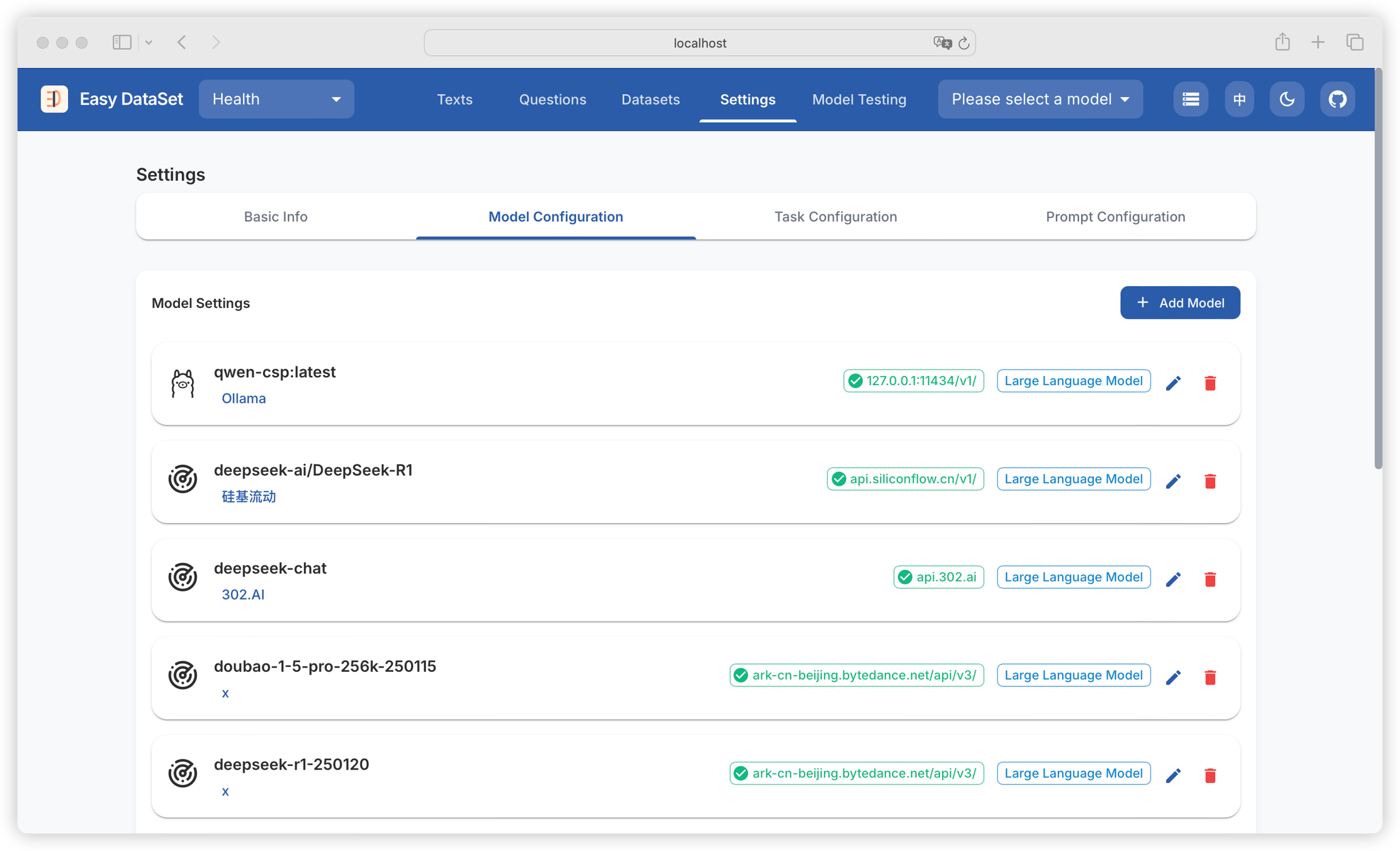

Model Configuration

This module configures the large models, both text models and vision models, that later features such as literature processing and dataset construction depend on.

The platform currently ships with several model providers built in. For these, you only need to fill in the API key for the provider you want to use:

| Provider ID | Name | API Address |
| --- | --- | --- |
| ollama | Ollama | http://127.0.0.1:11434/api |
| openai | OpenAI | https://api.openai.com/v1/ |
| siliconcloud | Silicon Flow | https://api.ap.siliconflow.com/v1/ |
| deepseek | DeepSeek | https://api.deepseek.com/v1/ |
| 302ai | 302.AI | https://api.302.ai/v1/ |
| zhipu | Zhipu AI | https://open.bigmodel.cn/api/paas/v4/ |
| Doubao | Volcano Engine | https://ark.cn-beijing.volces.com/api/v3/ |
| groq | Groq | https://api.groq.com/openai |
| grok | Grok | https://api.x.ai |
| openRouter | OpenRouter | https://openrouter.ai/api/v1/ |
| alibailian | Alibaba Cloud Bailian | https://dashscope.aliyuncs.com/compatible-mode/v1 |

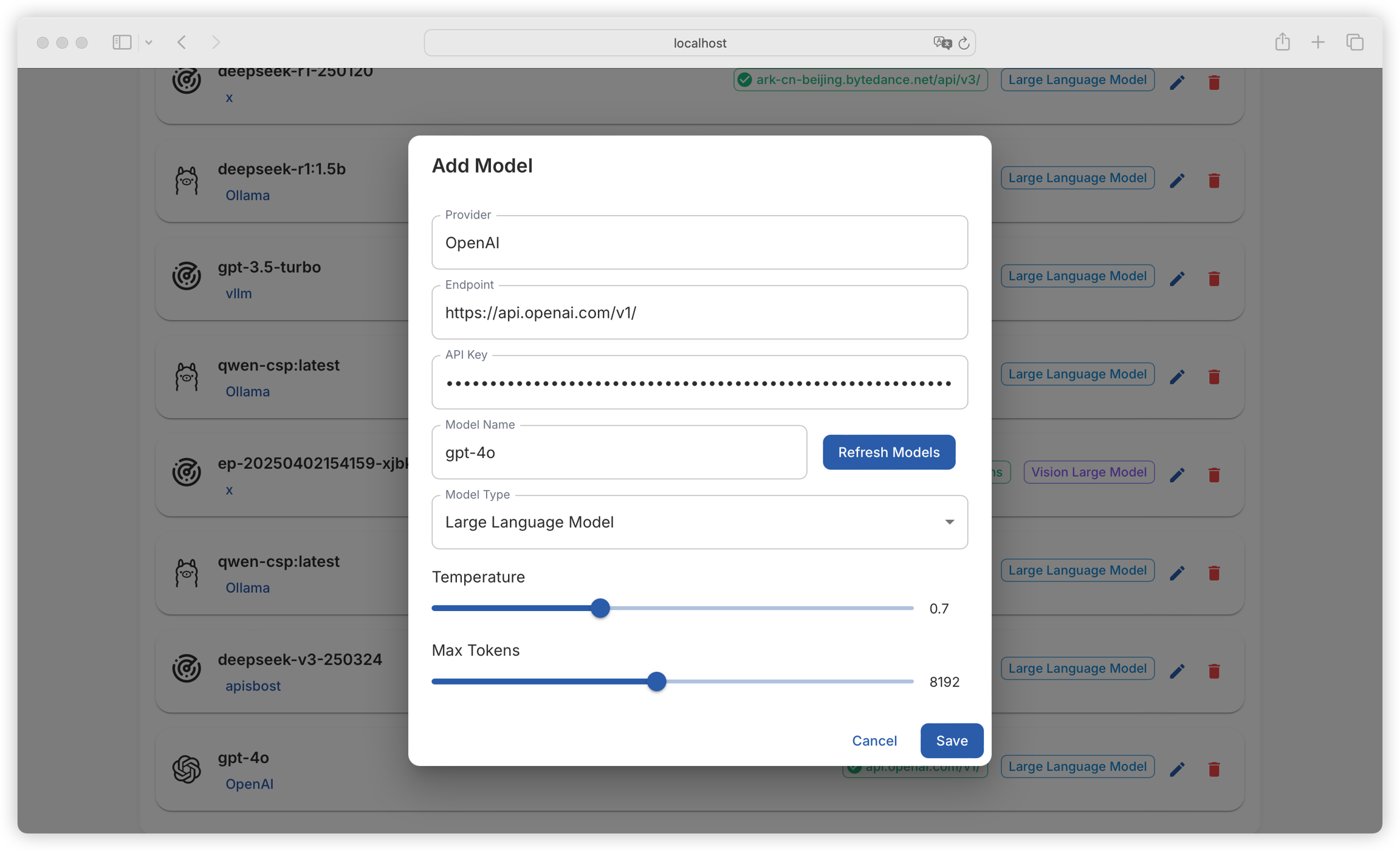

Note: Model providers that are not in the list above can also be configured. The provider name, API address, API key, and model name all accept custom input. As long as the API conforms to the OpenAI format, the platform is compatible with it.
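
As a rough illustration of what "OpenAI format" usually means in practice, the sketch below builds (without sending) a chat completion request: a JSON `POST` to `<API address>/chat/completions` with a `Bearer` API key. The platform's own request code is not shown in this document, so treat this as an assumption about the wire format rather than its implementation. The function name `build_chat_request`, the URL `https://api.example.com/v1/`, the key, and the model name are all hypothetical placeholders.

```python
import json
import urllib.request


def build_chat_request(base_url, api_key, model, prompt,
                       temperature=0.7, max_tokens=1024):
    """Build (but do not send) an OpenAI-style chat completion request."""
    # Join the API address and the endpoint path without doubling the slash.
    url = base_url.rstrip("/") + "/chat/completions"
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Hypothetical provider address, key, and model name, for illustration only.
req = build_chat_request("https://api.example.com/v1/", "sk-placeholder",
                         "example-model", "Hello")
print(req.full_url)
# To actually send the request: urllib.request.urlopen(req)
```

If a custom provider accepts a request shaped like this one, it is likely to work when entered as a custom provider, although individual providers may differ in details.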

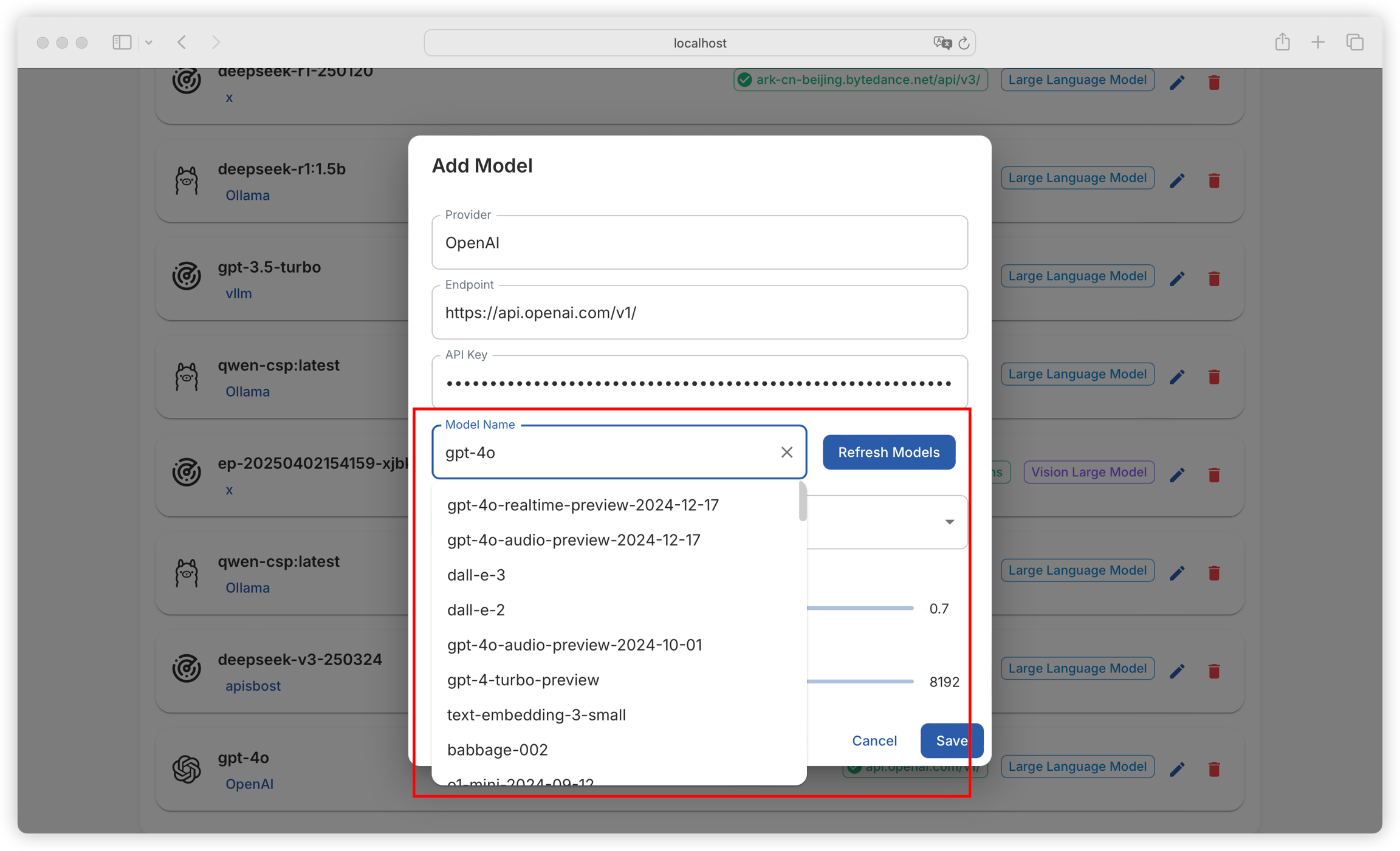

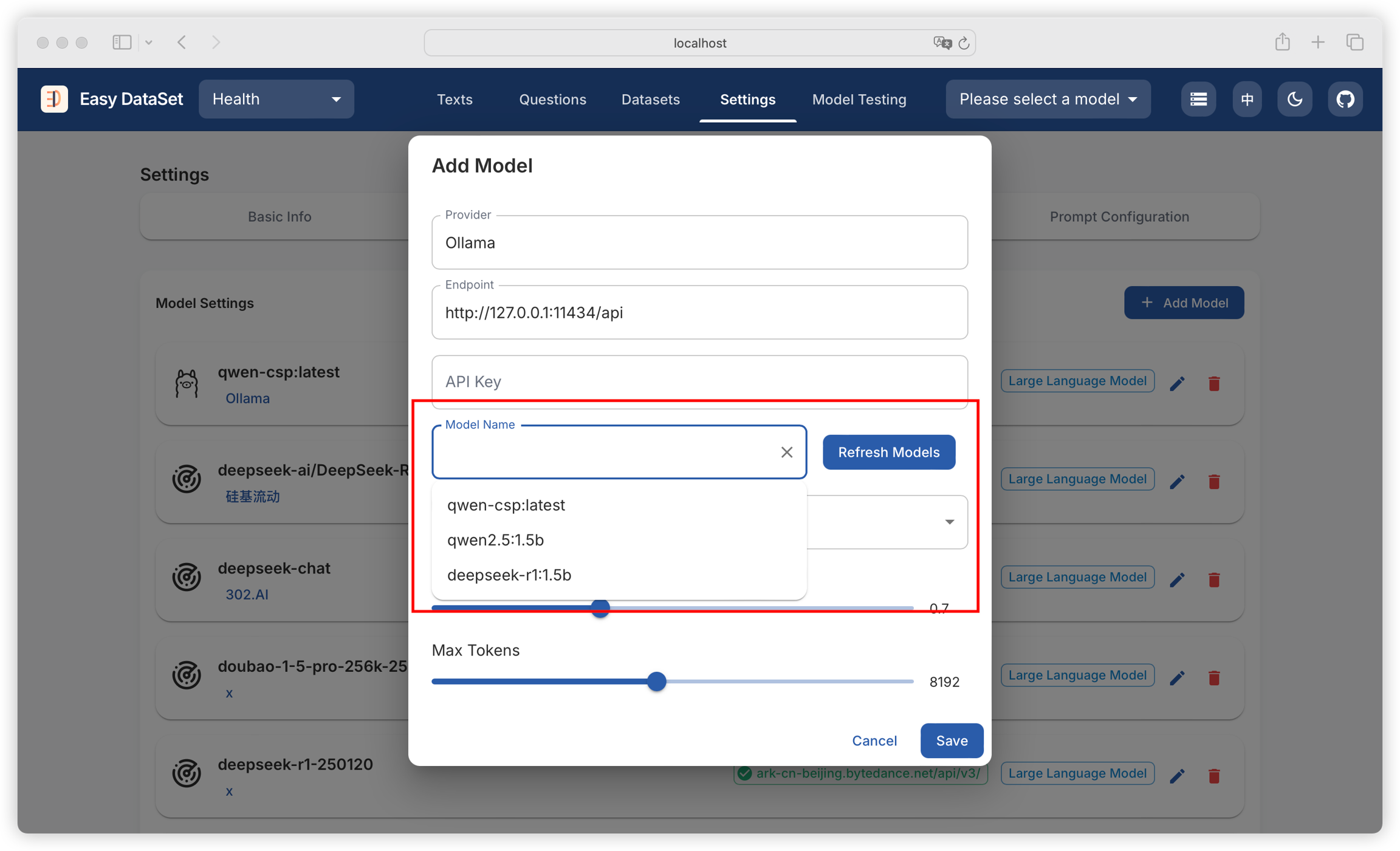

Click Refresh Model List to view all models provided by the provider (you can also manually enter the model name here):

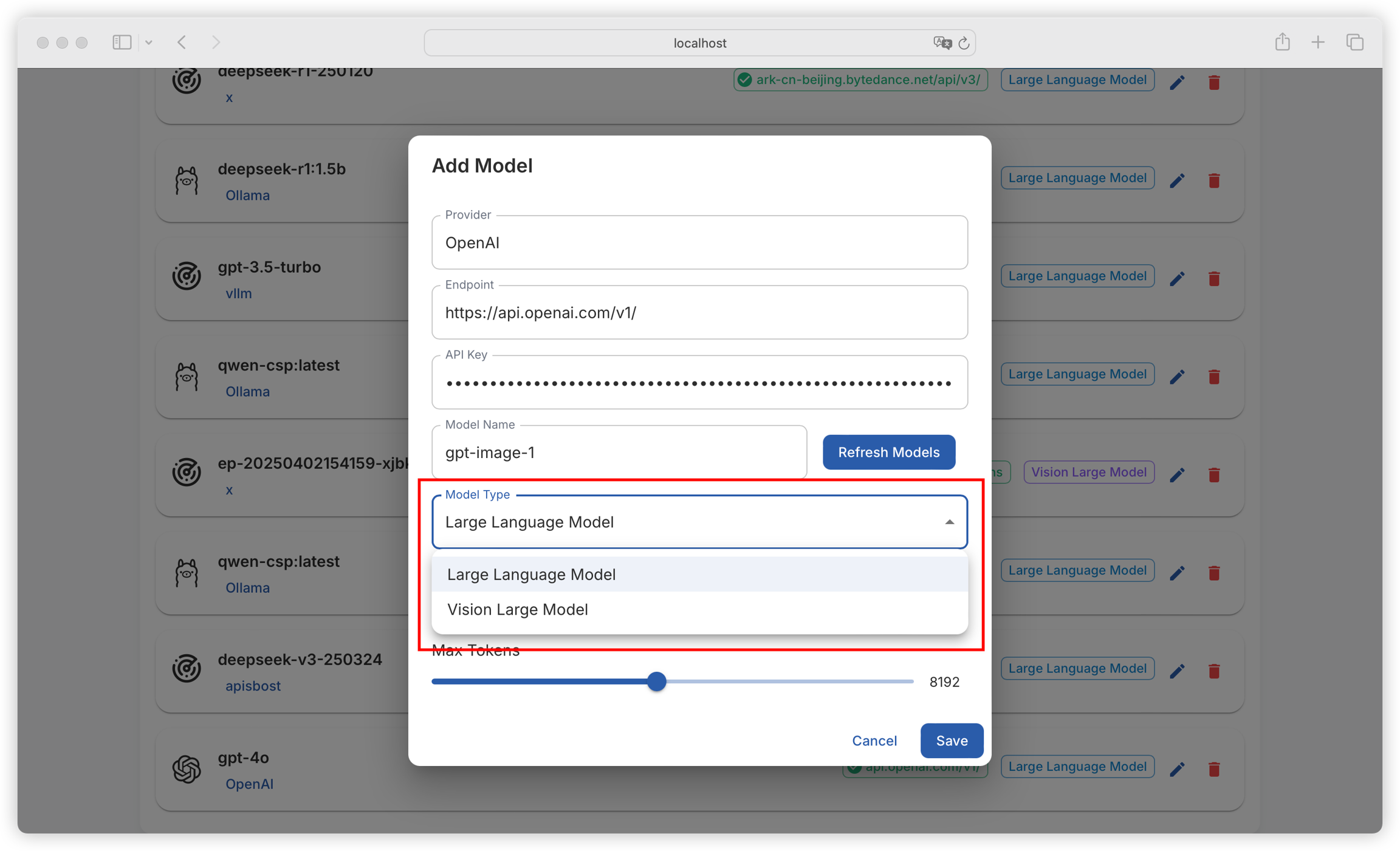
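
For OpenAI-compatible providers, a model list is commonly exposed at `<API address>/models`, returning a JSON object whose `data` array holds one entry per model. The sketch below assumes that convention; whether Refresh Model List uses exactly this call is not stated here. `list_model_ids` and `fetch_model_ids` are hypothetical helper names, and the sample response is made up.

```python
import json
import urllib.request


def list_model_ids(models_response):
    """Return sorted model IDs from an OpenAI-style /models response."""
    return sorted(item["id"] for item in models_response.get("data", []))


def fetch_model_ids(base_url, api_key):
    """Request the provider's model list (needs network access and a valid key)."""
    req = urllib.request.Request(
        base_url.rstrip("/") + "/models",
        headers={"Authorization": f"Bearer {api_key}"},
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return list_model_ids(json.load(resp))


# Offline demonstration with a made-up response body.
sample = {"object": "list", "data": [{"id": "model-b"}, {"id": "model-a"}]}
ids = list_model_ids(sample)
print(ids)
```

When a provider does not offer such an endpoint, entering the model name manually remains the fallback.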

You can configure both language models (for text generation tasks) and vision models (for visual analysis tasks):

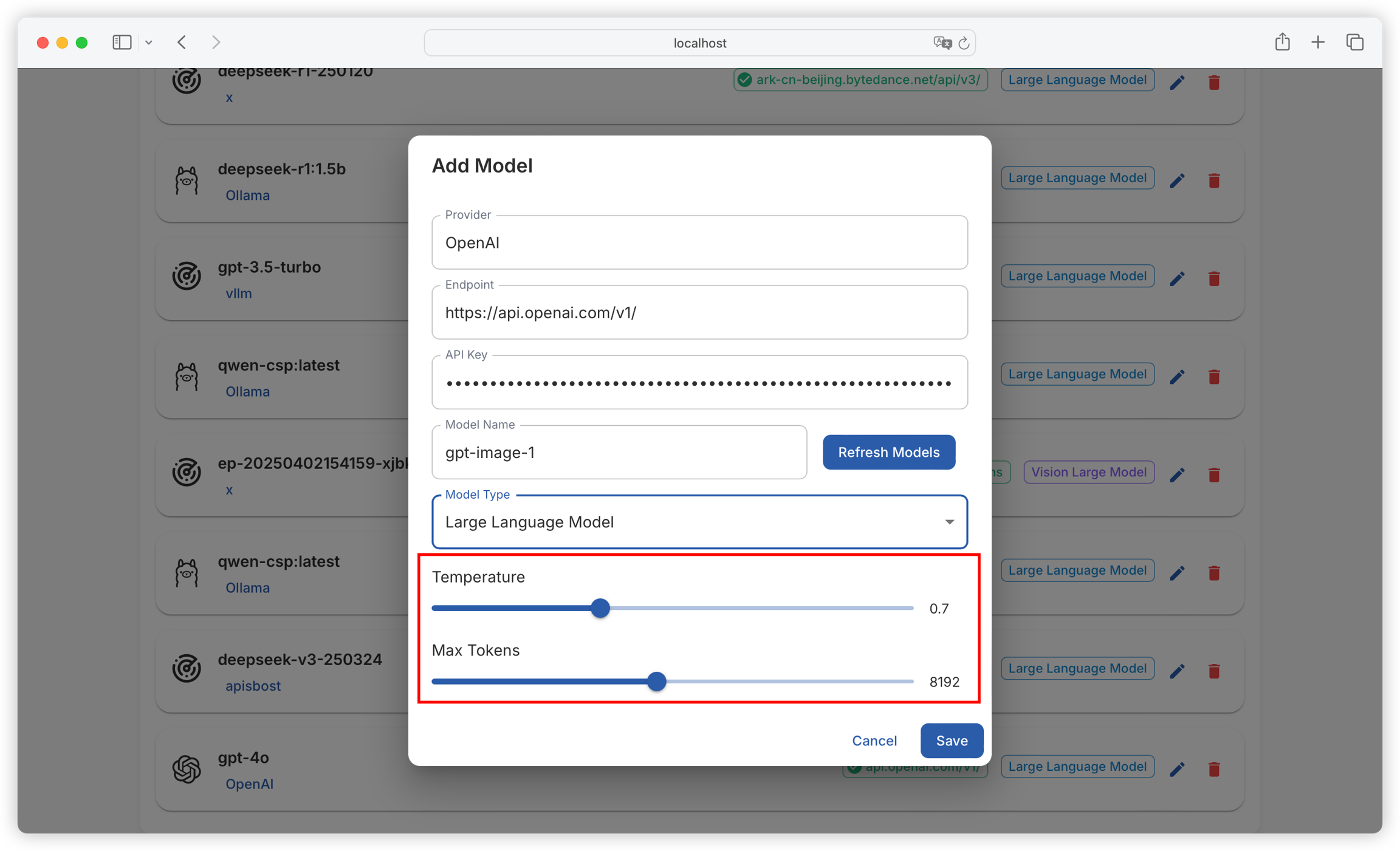

It also supports configuring the model's temperature and maximum output tokens:

Temperature: Controls the randomness of the generated text. Higher temperature results in more random and diverse outputs, while lower temperature leads to more stable and conservative outputs.

Max Token: Limits the length of text generated by the model, measured in tokens, to prevent excessively long outputs.
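
To make the effect of temperature concrete, the following sketch applies the standard temperature-scaled softmax to a small set of made-up token scores. It illustrates the general sampling principle, not the internals of any particular provider; the function name `softmax_with_temperature` and the scores are illustrative assumptions.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]  # made-up scores for three candidate tokens
low = softmax_with_temperature(logits, 0.2)   # sharply favors the top token
high = softmax_with_temperature(logits, 2.0)  # spreads probability more evenly
print([round(p, 3) for p in low])
print([round(p, 3) for p in high])
```

At the low temperature almost all of the probability lands on the highest-scoring token, which is why outputs become more stable; at the high temperature the lower-scoring tokens get a realistic chance of being sampled.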

Ollama is also supported. For Ollama, the platform can automatically fetch the list of locally deployed models:

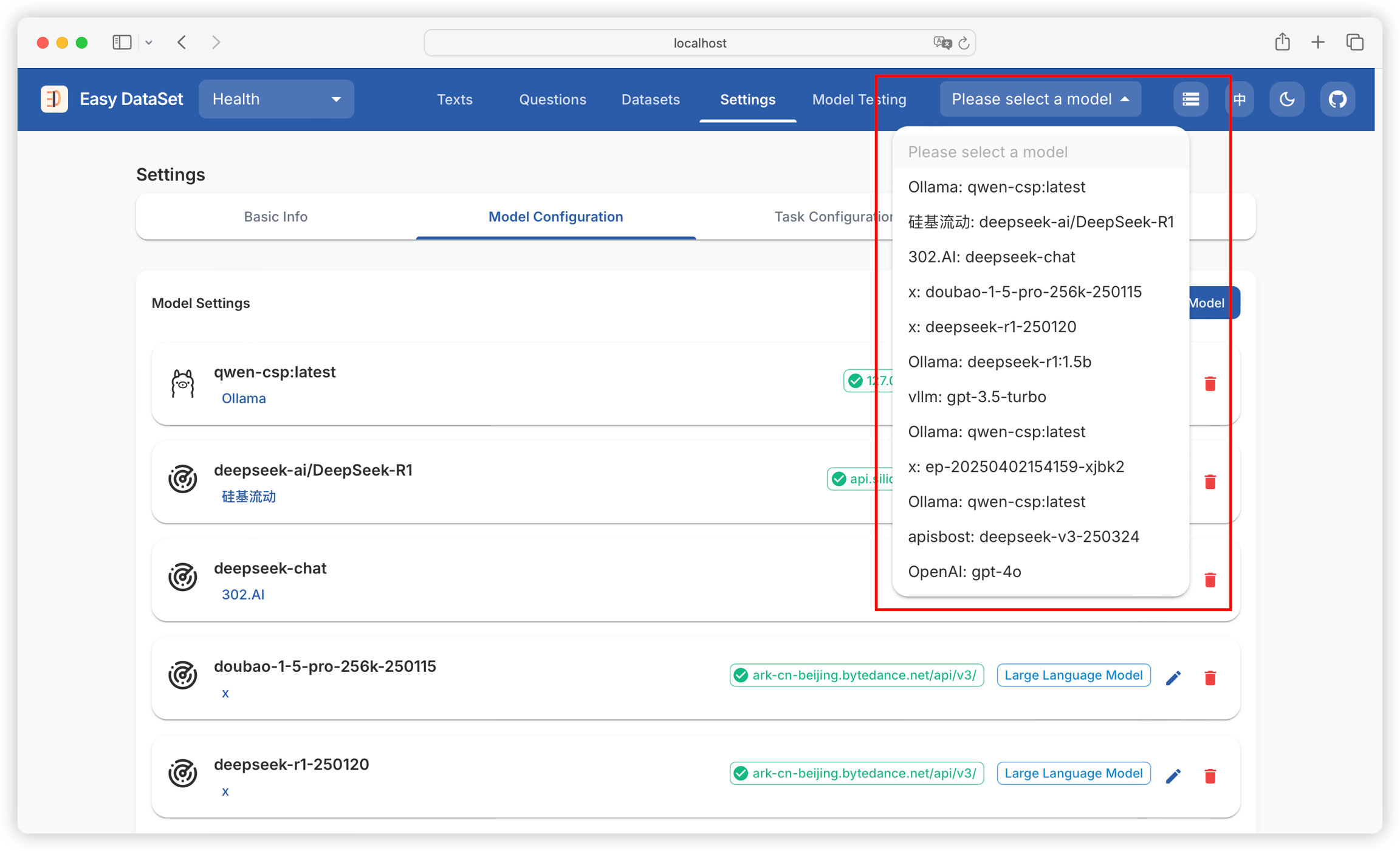
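
Ollama lists locally available models through its `/api/tags` endpoint, which returns a JSON object with a `models` array. The sketch below shows how such a list could be retrieved from the default address in the table above. It is an assumption that the platform uses this same endpoint; `model_names` and `fetch_local_models` are hypothetical helper names, and the sample response is trimmed and made up.

```python
import json
import urllib.request


def model_names(tags_response):
    """Extract model names from the JSON body of Ollama's /api/tags."""
    return [m["name"] for m in tags_response.get("models", [])]


def fetch_local_models(base_url="http://127.0.0.1:11434/api"):
    """Query a running Ollama server for its locally available models."""
    url = base_url.rstrip("/") + "/tags"
    with urllib.request.urlopen(url, timeout=5) as resp:
        return model_names(json.load(resp))


# Offline demonstration with a trimmed, made-up response body.
sample = json.loads('{"models": [{"name": "llama3:latest"}, {"name": "qwen2:7b"}]}')
names = model_names(sample)
print(names)
```

Calling `fetch_local_models()` requires an Ollama server running on the local machine; no API key is involved in this request.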

You can configure multiple models and switch between them using the model dropdown in the upper right corner.
